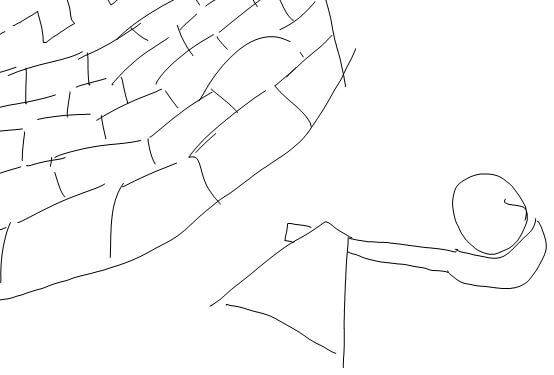

Security has always been a vexing problem for us. Back in earlier times, great castles and walls were built to keep out unfriendly types. Technologies and tactics such as catapults came along to reduce the effectiveness of these defenses.

What prompted this thought was an article I came across in Asian Power magazine, "The new paradigm for utility information security: assume your security system has already been breached." The author shares:

Basically, there has been a standard practice if you will for many years where the “fortress” approach was the norm, or paradigm, for enterprise and energy company security. This applied to physical security and cyber security. The fortress concept included a strict perimeter – usually defined by gates, guards, and firewalls.

In this approach, the assumption was that all the attackers were on the outside of the perimeter and that the strong perimeter would prevent the attacker from not only entering the walls but they could not access the crown jewels (aka data) because it was housed within layers of more security barriers that included more walls, more guards, and more firewalls and maybe a moat.

I ran this article by Emerson’s Bob Huba, whom you may recall from earlier cyber-security related posts. He agreed that the threat from inside the perimeter is very real. In practice, a hacker can use social networks, Google, and other methods to discover personal details about an executive or anyone else in the company. Executives are especially vulnerable because they tend to appear in the news or in annual reports, which makes their personal details easier to discover.

The hacker can then craft a very personal message to the executive, perhaps claiming a mutual friend or mutual interest, so that the hacker seems harmless and maybe even like somebody the executive has met. Once the executive opens the message, the attack can occur when he or she opens an attachment or follows a link to a malicious site. Traditional virus and firewall filters can prevent many, but not all, of these attacks.

It seems that the only mitigation for this (beyond deep scanning emails for bad stuff) is to train employees about the threat. The easiest filter is to check the sender’s email address. If it is a generic account such as Gmail, Yahoo, Hotmail, or another non-business email address, that should be a trigger to either permanently delete the message without opening it (or view it only in the preview pane) or use the email junk filtering process to disable the possible “bad stuff” in the message while figuring out whether it is real or bogus. The junk filtering will likely already handle the spoofed email case, where the address shown in the email differs from the actual email server that sent it. Bob notes that he deletes emails from people he does not recognize or does not already trust. If it is important, folks will find another way to contact him.
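For readers who like to see a rule of thumb written down, here is a minimal Python sketch of the sender check described above. It is only an illustration, not how any particular mail product works: the function names, the `"deliver"`/`"junk"`/`"review"` labels, and the short domain list are all assumptions, and comparing the visible From address with the Return-Path is a crude stand-in for the spoof detection a real junk filter performs.

```python
from email.utils import parseaddr

# Assumed list of generic webmail domains; the post names Gmail, Yahoo, and Hotmail.
GENERIC_DOMAINS = {"gmail.com", "yahoo.com", "hotmail.com"}


def domain_of(header_value):
    """Return the lower-cased domain of an address header, or "" if none is found."""
    _, address = parseaddr(header_value)
    return address.rpartition("@")[2].lower() if "@" in address else ""


def triage(from_header, return_path, trusted=frozenset()):
    """Classify one message as "deliver", "junk", or "review" (hypothetical labels)."""
    _, address = parseaddr(from_header)
    from_domain = domain_of(from_header)
    path_domain = domain_of(return_path)

    # Visible sender and envelope sender disagree: treat as a possible spoof.
    # Legitimate bulk mail can also look like this, so it goes to junk, not the bin.
    if from_domain and path_domain and from_domain != path_domain:
        return "junk"
    # Senders the recipient already knows and trusts go straight through.
    if address.lower() in trusted:
        return "deliver"
    # Missing or generic, non-business address: hold it in junk with content disabled.
    if not from_domain or from_domain in GENERIC_DOMAINS:
        return "junk"
    # Unknown business address: a person decides (Bob's own rule would be to delete).
    return "review"
```

Calling `triage("Old Friend <jsmith@gmail.com>", "<jsmith@gmail.com>")` returns `"junk"` because the sender uses a generic account. A rule like this only supplements employee training, since a hacker can just as easily send from a plausible-looking business domain.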

The bottom line is that there is a personal element to security, and continued communication with employees about the threats and how best to deal with them is an important part of any security program. This communication element should be part of the planning process for the team in charge of overall security risk mitigation.